PHH, as I will call it, is an extremely important determinant of outcomes in a small subgroup of preterm infants. Infants with severe IVH who don’t develop PHH have outcomes that are little affected. As our group reviewed, even grade 4 IVH, if unilateral, and affecting 1 or 2 of the Bassan zones, has little impact on motor or cognitive outcomes unless complicated by PHH.

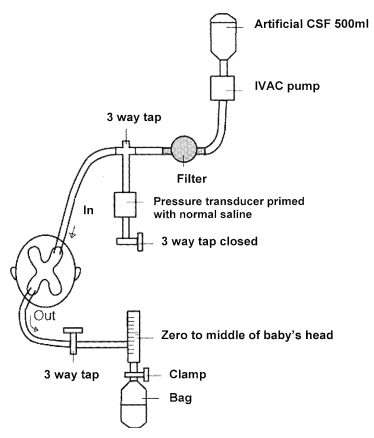

Andrew Whitelaw died recently (a touching eulogy is on the site of the Newborn Brain Society). I knew him personally, having worked as his resident during a summer in Jersey (Channel Isles, UK), when he covered for my consultant during their vacation. I remember meeting him on the beach with a surfboard under his arm, and a pager tucked in his wetsuit! Andy was interested in PHH throughout his productive career, and led some important trials in its management. He was the PI of the Ventriculomegaly Trial Group for their pivotal trial (Ventriculomegaly Trial Group. Randomised trial of early tapping in neonatal posthaemorrhagic ventricular dilatation. Arch Dis Child. 1990;65:3–10). That trial did not show any real benefit of routine early LP (or ventricular tapping) compared to “conservative” management of PHH. Of interest, the indication for permanent shunting was typical of the time, “failure to control head size”, which clearly implies rather late intervention. Andy was also critically involved in the development of DRIFT treatment of PHH (DRainage, Irrigation and Fibrinolytic Therapy) for infants with bilateral ventricular enlargement to more than 4 mm over the 97th percentile. This involved inserting a ventricular reservoir, then injecting tPA into the ventricles, then perfusing the lateral ventricles with a solution designed to resemble CSF.

Although this invasive therapy has not been widely adopted, it did show improved long-term outcomes in a small RCT.

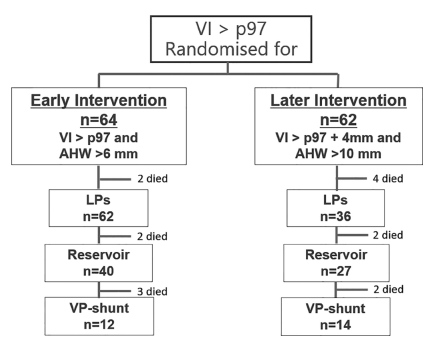

More recently, the ELVIS trial (Early versus Late Ventricular Intervention Study, in which Andy was a collaborator) showed improved 2-year outcomes in infants in whom intervention was started early. The protocol for the early intervention group included a trial period of lumbar punctures (maximum of 3) followed by reservoir placement with repeated drainage until CSF accumulation stopped or a shunt was inserted. The primary outcome for ELVIS was death or the need for a permanent VP shunt, which was not different between the groups. But, to be honest, I don’t think there was any real chance that survival would be affected by earlier intervention. Re-reading the original publication, I realize that it is difficult to figure out what the primary outcome rate was in each group, as it is never stated in the text. From the figure, it looks like it was 19/64 early intervention babies (7 deaths and 12 VP shunts) and 22/62 in the late group (8 deaths and 14 shunts).
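For anyone who wants to check the arithmetic, here is a minimal sketch that turns those counts into rates. It assumes the numbers I read off the figure are right, and that the primary outcome is the composite of death or VP shunt; the variable and function names are mine, purely for illustration, and this is not a re-analysis of the trial.

```python
# Counts as read from the ELVIS figure (an assumption: they are not stated in the text of the paper).
early = {"n": 64, "deaths": 7, "shunts": 12}
late = {"n": 62, "deaths": 8, "shunts": 14}


def primary_outcome_rate(group):
    """Proportion of a group with the composite outcome (death or VP shunt)."""
    return (group["deaths"] + group["shunts"]) / group["n"]


early_rate = primary_outcome_rate(early)  # 19/64
late_rate = primary_outcome_rate(late)    # 22/62

# Simple unadjusted comparisons between the two groups.
risk_difference = early_rate - late_rate
relative_risk = early_rate / late_rate

print(f"Early group: {early_rate:.1%}")   # 29.7%
print(f"Late group:  {late_rate:.1%}")    # 35.5%
print(f"Risk difference: {risk_difference * 100:.1f} percentage points")  # -5.8
print(f"Relative risk:   {relative_risk:.2f}")  # 0.84
```

These are crude, unadjusted figures with no confidence intervals, so they only illustrate how small the difference in the primary outcome was.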

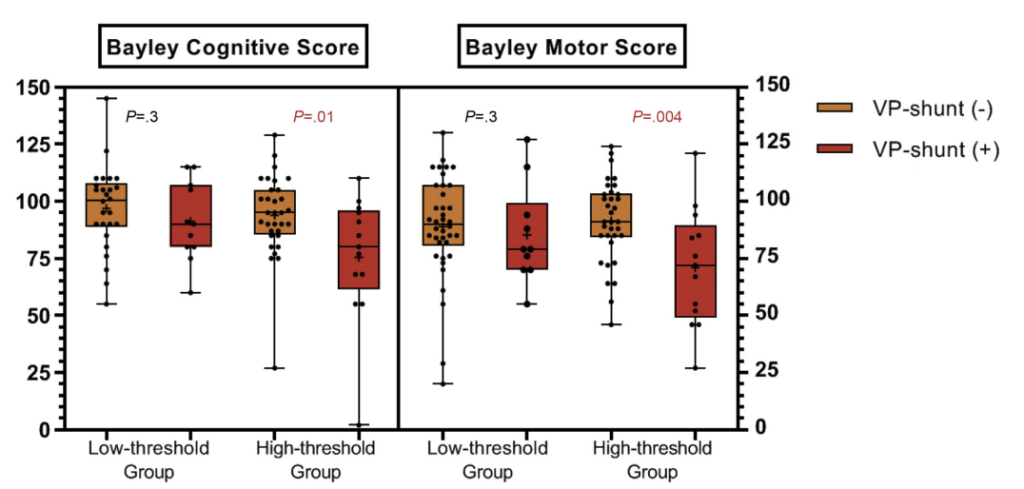

The 2-year outcomes (Cizmeci MN, et al. Randomized Controlled Early versus Late Ventricular Intervention Study in Posthemorrhagic Ventricular Dilatation: Outcome at 2 Years. J Pediatr. 2020;226:28–35.e3), however, show definite benefit in the early treatment group.

The adjusted OR for adverse outcome (death, or any grade of CP, or a Bayley (version 2 or 3) motor or cognitive score <-2SD) was 0.24; 95% CI, 0.07–0.87.

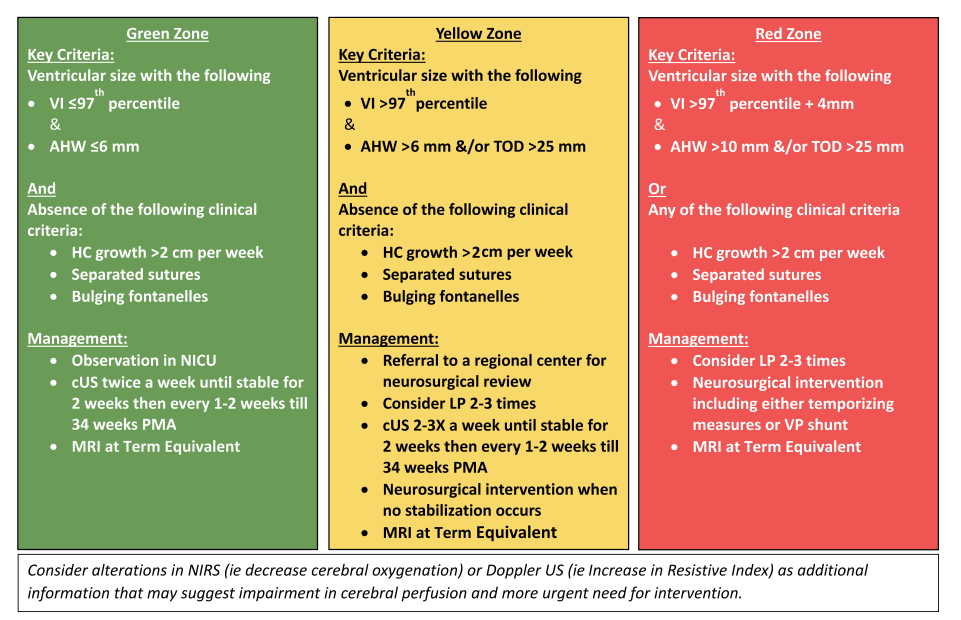

Based on these trial results and other observational data, we developed an intervention protocol which follows best practice, and is similar to suggestions in a fairly recent review (El-Dib M, et al. Management of Post-hemorrhagic Ventricular Dilatation in the Infant Born Preterm. J Pediatr. 2020;226:16–27.e3).

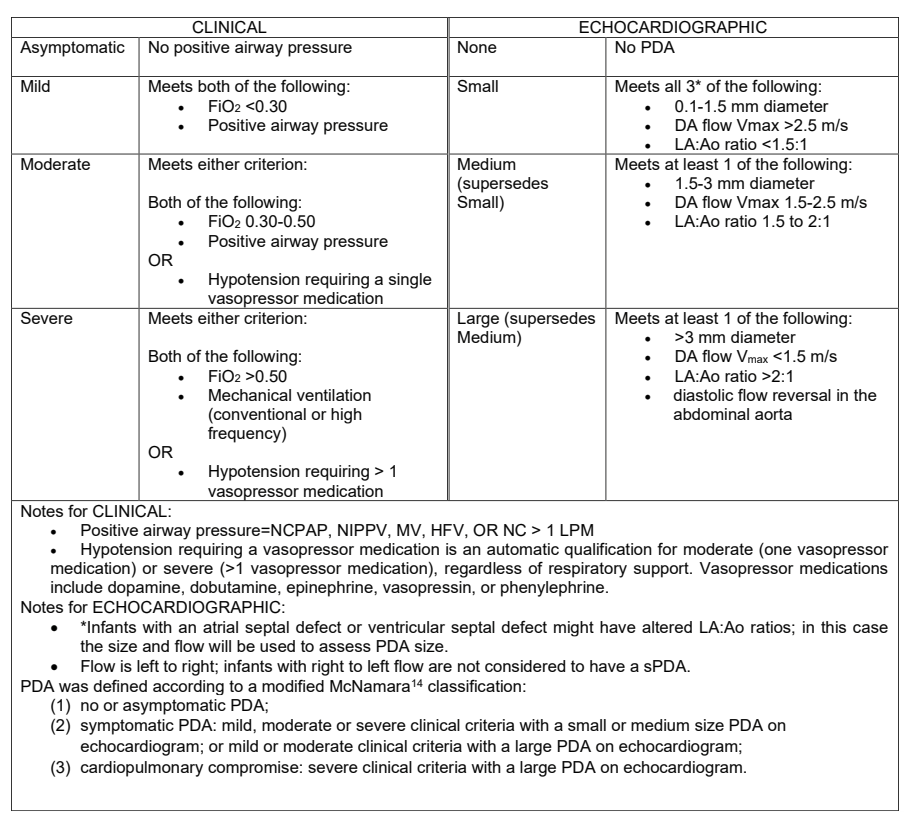

We use criteria very similar to the Yellow zone criteria to start LP attempts, with neurosurgical intervention if dilatation is persistent or progressive according to clear criteria.

One thing I appreciate about the group where I work is our production, and following of, such clinical protocols. We have large numbers of such protocols in every area of neonatal care, and try to ensure that they are followed unless there is a good clinical reason in an individual case for deviating. For such a difficult decision as this, requiring an understanding of the literature, the prognosis, and the therapeutic options, I can’t imagine nowadays trying to make a decision for each infant without the guidance of such a protocol. Which is why I was surprised that so many centres do not have such a protocol.

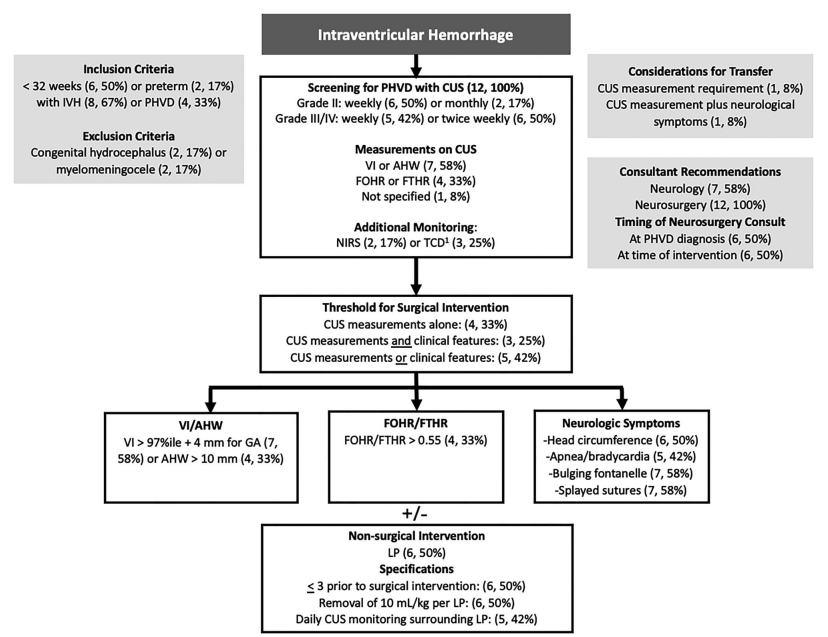

Coletti K, et al. Post-hemorrhagic ventricular dilatation: Comparison of management pathways among North American level IV NICUs. J Perinatol 2026. This article was from the Children’s Hospitals Neonatal Consortium, of mostly US NICUs (plus Toronto Sick Kids). Among the 40 NICUs that replied, only 12 had a written guideline; another 17 said they had a consensus. I find it hard to imagine what you would do in a NICU without a guideline: “if it’s Monday we intervene at an anterior horn width of 8 mm, but tomorrow it will be 6 mm (unless it’s the other neurosurgeon)”? Come on guys! What do you say to the parents? “We don’t know what to do, so we toss a coin”?

Even among those few with a guideline, there was a lot of variation, and most seem to have quite late thresholds for surgical intervention:

Mahaney KB, et al. Wide variation in death rates and post-hemorrhagic hydrocephalus (PHH) treatment in preterm severe intraventricular hemorrhage (IVH). J Perinatol 2026. These data from California show wide variation in the approaches to PHH, with many babies not having temporary interventions with reservoirs or subgaleal shunts prior to definitive VP shunting, which strongly suggests late intervention in most such babies. There were about 2,000 deaths among about 5,500 babies with severe IVH; another 587 had a permanent VP shunt, 268 of them following a temporizing neurosurgical intervention. Another 89 had a temporary procedure and never had a shunt, either because they died (n=16) or because, I suppose, the dilatation resolved or stabilized.

The evidence supporting early intervention for PHH is limited, it is true. If you want to be critical, you could argue that the improvement in the long-term outcomes in ELVIS is only statistically significant after adjustment for other risk factors. But even if that is your argument, it is important to come to a local, clearly-defined consensus. You also should take into account the observational data, comparing early to late intervention, such as this study comparing the Toronto Sick Kids results (at the time, a late approach) to results from Holland with an early intervention protocol, which was similar to that suggested by El-Dib and colleagues (Leijser LM, et al. Posthemorrhagic ventricular dilatation in preterm infants: When best to intervene? Neurology. 2018;90(8):e698–e706). The early intervention babies in that study had excellent outcomes, similar to babies without IVH.

At each stage in an ideal protocol there are important decisions to be made, and to be discussed with parents: a trial of lumbar punctures; how many LPs to perform and when to stop them; when to proceed to temporizing intervention; whether to insert an Ommaya reservoir or a Ventriculo-Subgaleal shunt (which may be as much an institutional decision as a personalized one); and when to decide on definitive shunting, which will usually be a Ventriculo-Peritoneal shunt.

I don’t know how a parent could make most of these decisions without clear guidance from a treatment pathway agreed by all involved parties, especially neonatology and neurosurgery.

For babies without contra-indications, and especially for those without a major parenchymal injury, a protocol following the broad outlines of the El-Dib guidance referenced above will minimize secondary injury caused by ventricular dilatation. Of importance, there is no requirement for clinical signs of intracranial hypertension before intervention is indicated. Such signs occur very late in preterm infants, in the “Red Zone”, and waiting for them should be avoided whenever possible.